Printed from acutecaretesting.org

July 2007

Is there a QC Gap?

Process

We have prepared a survey that will help you see if you are performing QC the way the experts recommend – or if there is a gap between expert theory and your practice. Take the questionnaire below, then transfer your results into the attached spreadsheet. Please e-mail your spreadsheet to Zoe Brooks so the data can be analyzed, anonymously of course.

Please answer the questions truthfully – as reflected by your examination of the facts at this time (not what is planned or written or what you think should be done).

Results will be accepted up to 30 days from the date of publication of this article. We will e-mail you a consolidated report of the study findings, so you can benchmark your performance against other participants. We hope to complete the data analysis, send reports back to you and have a discussion forum in place with survey results within 45 days of the closing date.

Quality control - theory vs. practice

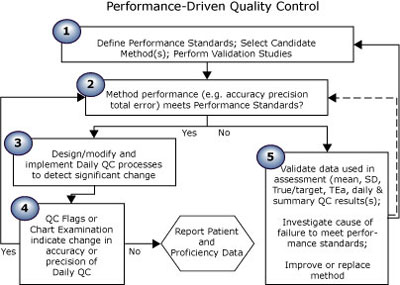

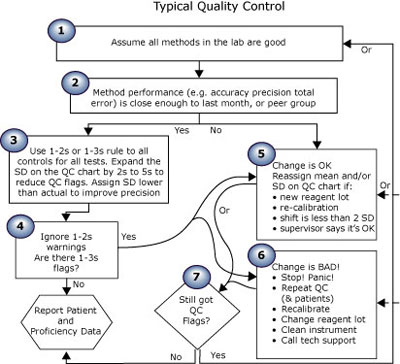

The first essay in this series (Quality control in theory and practice: a gap analysis) compared theoretical, performance-driven quality control (Fig. 1) with “typical” QC practice (Fig. 2). Read on, and take the quiz to see where you rate!

FIGURE 1: Theoretical QC practice

FIGURE 2: Typical QC practice

The five steps of performance-driven quality control shown in Fig. 1 reflect the theory recommended by QC experts, as evidenced by the quotations from many well-known authors shown below.

| PD-QC theory step 1: Define performance standards; select candidate method(s); perform validation studies. | Expert theories generally agree on requirements for method selection and validation, including the application of performance standards. |
| --- | --- |
| Research and select an analytical process that will produce results at a rate of x to y per day, at a cost of x to y each, with accuracy of x % and precision of y %. Define performance standards for each analyte: if a sample has a true value of x units, reported results must be within y units or z % of that value. | Other critical method factors or characteristics, such as cost/test, specimen types, specimen volumes, time required for analysis, rate of analysis, equipment required, personnel required, efficiency, safety, etc., must be considered during the selection of the analytical method. [Westgard] [1] For about 40 years, there has been a steady stream of publications concerned with the generation and application of quality specifications. There appeared to be a real conflict about how to set quality specifications, but a decisive recent advance was that a consensus was reached in 1999 on global strategies to set quality specifications in laboratory medicine. The hierarchy is shown below: |

TABLE 1: PD-QC theory step 1
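The step 1 performance standard ("if a sample has a true value of x units, reported results must be within y units or z % of that value") can be written as a simple acceptance check. This is a minimal sketch, not part of the PD-QC method as published: the function names are hypothetical, and it assumes the common convention that the allowable limit is whichever is larger, the fixed limit (y units) or the percentage limit (z % of the true value). The article does not say which applies.

```python
def allowable_error(true_value: float, abs_limit: float, pct_limit: float) -> float:
    """Allowable total error (TEa), in analyte units, at a given true value.

    Assumption (not stated in the article): the limit is the larger of a
    fixed number of units and a percentage of the true value.
    """
    return max(abs_limit, abs(true_value) * pct_limit / 100.0)


def within_performance_standard(result: float, true_value: float,
                                abs_limit: float, pct_limit: float) -> bool:
    """True if a reported result lies within TEa of the true value."""
    return abs(result - true_value) <= allowable_error(true_value, abs_limit, pct_limit)


# Hypothetical standard: within 0.3 units or 10 %, whichever is larger.
print(within_performance_standard(5.4, 5.0, abs_limit=0.3, pct_limit=10))  # True (limit 0.5)
print(within_performance_standard(2.5, 2.0, abs_limit=0.3, pct_limit=10))  # False (limit 0.3)
```

At low concentrations the fixed limit dominates, and at high concentrations the percentage limit does; laboratories that define only one of the two would simply set the other to zero.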

| PD-QC theory step 2: Method performance (e.g. accuracy, precision, total error) meets performance standards? | The experts have recommended applying performance standards (TEa limits) to method validation and quality control since the 1960s. |
| --- | --- |
| Ensure performance standards are met before reporting any patient results. | One of the first recommendations for establishing quality standards was published by Tonks in 1963; these standards were presented in the form of allowable total errors. [3] In 1970, Cotlove et al. used within-subject biological variation to derive standards for allowable SDs. [4] In 1974, Westgard et al. formulated criteria, based on the medical usefulness of test results, that could be used to decide whether an analytical method has acceptable precision and accuracy. [5] |

TABLE 2: PD-QC theory step 2
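Step 2 asks whether observed accuracy, precision and total error meet the performance standard. The sketch below summarizes replicate validation results under stated assumptions: total error is estimated as |bias| + 1.65 SD (one widely used convention; some laboratories use 2 or 1.96 SD instead), and Cotlove's criterion is taken in its commonly quoted form, analytical CV no more than half the within-subject biological CV. The function name and the data are hypothetical.

```python
import statistics


def validation_summary(results, target, tea, cv_within_subject=None):
    """Summarize replicate validation results against a performance standard.

    results: replicate measurements of a material with a known (target) value.
    tea: allowable total error, in the same units as the results.
    cv_within_subject: optional within-subject biological CV (%); if given,
        the analytical CV is also checked against Cotlove's criterion
        (analytical CV no more than half the within-subject CV).
    """
    mean = statistics.mean(results)
    sd = statistics.stdev(results)        # sample SD (n - 1 denominator)
    bias = mean - target
    cv = 100.0 * sd / mean
    total_error = abs(bias) + 1.65 * sd   # one common total-error estimate
    summary = {"mean": mean, "sd": sd, "cv": cv, "bias": bias,
               "total_error": total_error, "meets_tea": total_error <= tea}
    if cv_within_subject is not None:
        summary["meets_cotlove"] = cv <= 0.5 * cv_within_subject
    return summary


# Hypothetical validation run: 8 replicates of a material with target value 98.
s = validation_summary([101, 99, 102, 100, 98, 103, 100, 101],
                       target=98, tea=10, cv_within_subject=4.0)
print(f"bias={s['bias']:.2f} SD={s['sd']:.2f} TE={s['total_error']:.2f} "
      f"meets TEa: {s['meets_tea']}")  # bias=2.50 SD=1.60 TE=5.15 meets TEa: True
```

With the same data and a stricter TEa of 5 units, the method would fail, which is exactly the "before reporting any patient results" decision the table describes.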

| PD-QC theory step 3: Design/modify and implement daily QC processes to detect significant change. | For decades, expert theories have recommended choosing QC rules by comparing method accuracy and precision with defined performance standards. |
| --- | --- |
| Select QC samples: select QC samples with analyte levels that monitor clinical decision points; verify that QC samples react the same way as patient samples (i.e. changes in accuracy or precision of patient results are reflected by proportional changes in accuracy or precision in QC sample results). Select a QC strategy consisting of frequency of testing, QC rules and processes to create and examine QC charts: choose a QC strategy that will always detect changes that would cause any results to fail to meet the defined performance standard; calculate the mean as an indicator of accuracy; calculate the SD or CV as an indicator of imprecision; assign the calculated mean and SD on the QC chart; calculate the critical systematic error (SEc); select an appropriate control strategy based on SEc. | The laboratory must establish the number, type and frequency of testing control materials, using the performance specifications established by the laboratory as specified in §493.1253(b)(3). [CLIA regulations] [6] We rely on controls to behave the same way as patient samples and detect errors in the analytical system. The ideal control material will: "The appropriateness of an optimal QC strategy seems very much to depend on the quality required, as well as the expected instability of the analytical method (e.g., type, magnitude and frequency of errors)." [Wandrup] [8] "The act of establishing your own means and SDs brings in the performance specifications observed in your own laboratory." [Carey] [9] “A laboratory metric that has been used to select and design QC procedures is the critical systematic error, SEc, which can be calculated from the tolerance or quality requirement defined for the test and the imprecision and inaccuracy observed for the method […] The implication is that the error detection capability of the QC procedure should complement the performance capability of the process. With high process capability, the errors that cause defective results will be large and more easily detected by statistical quality control. As process capability decreases, the errors that must be detected get smaller, which requires better detection capabilities for the statistical QC procedure.” [Westgard] [10] |

TABLE 3: PD-QC theory step 3
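The last two actions in step 3 (calculate SEc, then select a control strategy based on it) can be sketched numerically. This sketch assumes the commonly published form SEc = [(TEa − |bias|) / SD] − 1.65 and independent, Gaussian control results. It compares simple 1_ks rules by their probability of detecting a shift of size SEc (Ped) and of falsely rejecting a stable run (Pfr). The planning targets Ped ≥ 0.90 and Pfr ≤ 0.05, the method figures, and the function names are all assumptions for illustration.

```python
import math


def norm_cdf(z: float) -> float:
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def critical_systematic_error(tea: float, bias: float, sd: float) -> float:
    """SEc = [(TEa - |bias|) / SD] - 1.65, in multiples of the method SD.

    TEa, bias and SD must share units (all in %, using CV for SD,
    or all in analyte units).
    """
    return (tea - abs(bias)) / sd - 1.65


def p_reject_1ks(k: float, n: int, shift: float = 0.0) -> float:
    """Probability that a 1_ks rule rejects a run of n control results.

    `shift` is a systematic error in SD units: shift=0 gives the
    false-rejection probability (Pfr); shift=SEc gives the probability of
    detecting the critical error (Ped). Assumes independent Gaussian
    results and an unchanged SD.
    """
    p_inside = norm_cdf(k - shift) - norm_cdf(-k - shift)
    return 1.0 - p_inside ** n


# Hypothetical method: TEa 10 %, bias 2 %, CV 2 %, so SEc = 2.35 SD.
sec = critical_systematic_error(tea=10.0, bias=2.0, sd=2.0)
for k in (2.0, 2.5, 3.0):
    for n in (2, 4):
        ped = p_reject_1ks(k, n, shift=sec)
        pfr = p_reject_1ks(k, n)
        # Planning targets assumed here: Ped >= 0.90 and Pfr <= 0.05.
        verdict = "candidate" if ped >= 0.90 and pfr <= 0.05 else "-"
        print(f"1_{k:g}s, N={n}: Ped={ped:.2f}, Pfr={pfr:.3f}  {verdict}")
```

With these assumed figures, only the 1_2.5s rule with N=4 meets both targets, and only narrowly. A 1_2s rule detects well but rejects too many good runs, and a 1_3s rule misses more than half of the critical shifts at N=2. This illustrates the quoted point that a lower margin for error demands a more sensitive QC procedure.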

| PD-QC theory step 4: QC flags or chart examination indicate change in accuracy or precision of daily QC? | The experts agree that a well-designed QC chart and strategy will identify change in an analytical system. (Such change must be evaluated before patient results are reported.) |
| --- | --- |
| Test QC samples. Plot all results on QC charts. Apply rules. Examine charts. If there are no QC flags, report patient results. | If none of the rules […] are violated, accept the run and report patient results. If any one of the rules […] is violated, the run is out-of-control; do not report patient test results. [Westgard] [11] |

TABLE 4: PD-QC theory step 4
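The "apply rules" action in step 4 can be sketched with one common reading of the Westgard multirule (1_3s, 2_2s, R_4s, 4_1s, 10_x). This is an illustration only. Laboratories differ in which rules they use and in how they apply them across runs and control materials. Here the within-run checks use every control level in the current run, and 4_1s and 10_x are checked per level across consecutive runs. Results are converted to z-scores using the assigned mean and SD on the QC chart. The function names and values are hypothetical.

```python
from typing import List


def z_score(value: float, mean: float, sd: float) -> float:
    """Distance of a control result from the assigned mean, in SD units."""
    return (value - mean) / sd


def westgard_check(runs: List[List[float]]) -> List[str]:
    """Return the rejection rules violated by the most recent run.

    runs[i][j] is the z-score of control level j in run i (oldest first);
    runs[-1] is the run being evaluated. An empty list means accept.
    """
    current = runs[-1]
    violated = []
    # 1_3s: one control result exceeds mean +/- 3 SD.
    if any(abs(z) > 3 for z in current):
        violated.append("1_3s")
    # 2_2s: two results exceed the same 2 SD limit, either two levels in this
    # run or the same level in the last two runs.
    within = sum(z > 2 for z in current) >= 2 or sum(z < -2 for z in current) >= 2
    across = len(runs) >= 2 and any(
        (p > 2 and c > 2) or (p < -2 and c < -2) for p, c in zip(runs[-2], current))
    if within or across:
        violated.append("2_2s")
    # R_4s: within this run, one control exceeds +2 SD and another -2 SD.
    if any(z > 2 for z in current) and any(z < -2 for z in current):
        violated.append("R_4s")
    # 4_1s and 10_x: checked per control level across consecutive runs.
    for level in range(len(current)):
        history = [run[level] for run in runs]
        last4, last10 = history[-4:], history[-10:]
        if len(last4) == 4 and (all(z > 1 for z in last4) or all(z < -1 for z in last4)):
            violated.append(f"4_1s (level {level + 1})")
        if len(last10) == 10 and (all(z > 0 for z in last10) or all(z < 0 for z in last10)):
            violated.append(f"10_x (level {level + 1})")
    return violated


means, sds = (100.0, 50.0), (4.0, 5.0)                   # hypothetical chart values
raw_runs = [(101.2, 47.5), (104.8, 54.0), (109.6, 60.5)]  # hypothetical results
runs = [[z_score(v, m, s) for v, m, s in zip(run, means, sds)] for run in raw_runs]
print(westgard_check(runs))  # ['2_2s']: hold patient results for this run
```

Checking rules on z-scores keeps the logic independent of units and concentration. It also means the check is only as good as the mean and SD assigned on the chart.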

| PD-QC theory step 5: Compare to performance standards; validate data; improve or replace method if necessary. | The very premise of quality control is that if a change in method performance is unacceptable (the method no longer meets performance standards), you should stop reporting patient results. |
| --- | --- |
| If QC flags indicate that the accuracy or precision of the method has changed, compare the mean and SD of the current data population to your performance standards. If the changed analytical process still produces results within allowable limits of the correct/true value, adjust the QC process and carry on. If your method no longer meets performance standards, stop reporting results while you make sure the numbers are correct, and take corrective action if indicated. | Results obtained for the performance characteristics (mean and SD) should be compared objectively to well-documented quality specifications, e.g., published data on the state of the art, performance required by regulatory bodies such as CLIA '88, or recommendations documented by expert professional groups. In addition, quality specifications can be derived from analysis of performance on clinical decision-making. [Fraser] [12] "To evaluate laboratory performance, the analytical imprecision and bias (obtained from the internal quality control protocol) is compared against the quality specifications (standards) for these two components of analytical error [accuracy and precision]. The analytical procedures that deviate from the standards have to be reviewed by laboratory professionals, and processes for improving performance implemented." [Ricos] [13] |

TABLE 5: PD-QC theory step 5
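The step 5 decision (still within allowable limits, so adjust and carry on; otherwise stop reporting) can be sketched as follows. This is a minimal sketch under stated assumptions: total error for the changed data population is estimated as |bias| + 1.65 SD (other multipliers are also used), and SEc uses the form [(TEa − |bias|) / SD] − 1.65. The class, function and action wording are hypothetical.

```python
import statistics
from dataclasses import dataclass


@dataclass
class ChangeDecision:
    action: str
    new_mean: float
    new_sd: float
    total_error: float
    sec: float


def evaluate_change(new_results, true_value, tea, z=1.65):
    """Decide what to do after QC flags a change in accuracy or precision.

    new_results: control results from the current (changed) data population.
    true_value: the correct/true value of the control material.
    tea: allowable total error, in the same units as the results.
    z: SD multiplier used in the total-error estimate (an assumption).
    """
    mean = statistics.mean(new_results)
    sd = statistics.stdev(new_results)
    bias = abs(mean - true_value)
    total_error = bias + z * sd
    sec = (tea - bias) / sd - 1.65   # margin for error of the changed process
    if total_error <= tea:
        action = "update chart mean/SD, review QC rules for the new SEc, continue reporting"
    else:
        action = "stop reporting; verify the numbers and take corrective action"
    return ChangeDecision(action, mean, sd, total_error, sec)


shifted = [104, 105, 103, 106, 104, 105]   # hypothetical post-shift QC results
print(evaluate_change(shifted, true_value=100, tea=10).action)
print(evaluate_change(shifted, true_value=100, tea=6).action)
```

The same shift is acceptable under a 10-unit standard and unacceptable under a 6-unit one. Whether a change is "OK" is therefore decided by the performance standard, not by whether the change can be explained.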

Ready to rate your QC practice against expert theory? Do you have a gap?

The following quiz lets you compare your QC practice with the theories presented above. For each step, enter a number from 0.0 to 5.0 that shows where your practice falls between the example given for a rating of 0.0 and the example given for a rating of 5.0. Mark an "X" if the question does not apply or you do not know. Mark an "E" if you feel that your processes are equally effective as, but not the same as, the example for a rating of 5.0. If you complete this quiz in the attached Excel file, the spreadsheet will add up your score and provide an interpretation.

| # | The 15 steps involved in performance-driven quality control | Score 0.0 if your practice is similar to this example… | Score 5.0 if your practice is similar to this example… | Enter your rating here |
| --- | --- | --- | --- | --- |
| 1 | When selecting an analytical process, compare claims of accuracy and precision. These claims form an important component of the selection process. | We do not include accuracy and precision on our criteria list. | Accuracy and precision are the most important items on our criteria list. | |
| 2 | Define performance standards for each analyte that state "if a sample has a true value of x units, reported results must be within y units or z % of that value." | Never. We do not have performance standards or TEa limits defined for any analyte. “This is a good lab. We are good people. Our methods are all good.” | We set performance standards for all tests. | |
| 3 | Ensure performance standards are met before reporting any results. | Never. | Always. | |
| 4 | Select QC samples with analyte levels that monitor clinical decision points. | No. We just accept what the manufacturer provides. | Yes. If necessary, we purchase separate controls or make samples. | |
| 5 | Verify that QC samples react the same way as patient samples (i.e. changes in accuracy or precision of patient results are reflected by proportional changes in accuracy or precision in QC sample results). | No. We never verify changes in QC with patient samples, or monitor patient values. We don’t check for this when purchasing new controls. | Yes. We always verify changes in QC with patient samples, and monitor patient values or moving averages. We check new QC samples for this before purchasing. | |
| 6 | Periodically calculate the mean of a defined set of QC points as an indicator of accuracy. | No. We use a running mean. We don't examine or assess mean values on a regular basis. | Yes. We calculate, examine and assess mean values on a regular basis. | |
| 7 | Periodically calculate the SD or CV of a defined set of QC points as an indicator of imprecision. | No. We use a running SD. We don't examine or assess SDs or CVs on a regular basis. | Yes. We calculate, examine and assess SDs or CVs on a regular basis. | |
| 8 | Assign the current calculated mean and SD on the QC chart. | No. We assign a mean from history or the package insert or whatever. And/or: “The SD assigned on the chart is not the actual method SD; it comes from PT limits or package inserts, or we just multiply it a few times so we don’t get false QC flags.” | Yes. We always assign the current calculated mean and SD on the QC chart. We rely on our QC strategy to alert us to change. When a shift in the mean occurs, we update the mean on the QC chart (after making sure the system still meets performance standards). | |
| 9 | Periodically calculate the margin for error or critical systematic error (SEc) for each QC sample. | Never. | Regularly. | |
| 10 | Select appropriate control strategies (frequency of testing, QC rules, and processes to create and examine QC charts) for each QC sample on each analyte based on margin for error (SEc). Choose a QC strategy that will detect changes that would cause results to fail to meet the performance standards defined for each QC sample. | No. We use whatever QC software comes with our instrument or LIS or QC samples. We never compare QC results to performance standards. Or: “Someone (who cannot be questioned) decided to use a 1-2s or 1-3s rule for all controls on all tests.” | Yes. We proactively select QC strategies and implement performance-driven quality control. We regularly compare QC results to performance standards and adjust the QC process if the method performance changes (as noted by a change in mean or SD of a new data set). | |
| 11 | Plot all results on QC charts. | Never. We don’t plot results. | Always. All values. | |
| 12 | Apply rules, examine charts, and report patient results only if there are no QC flags. | No. We report results and then someone examines the QC later. Or, we report all results; QC flags don’t make us stop reporting. The doctors need the results. | Yes. We always check QC before reporting patient results. We never report results on runs with QC rejects until the cause of the flag has been determined and we are sure the method still meets performance standards. | |
| 13 | If QC flags indicate that the accuracy or precision of the method has changed, compare the mean and SD of the current data population to performance standards. | If we start getting a new mean, then that must be what the control should be now. Or: “Change is OK if the supervisor says so.” Or: “If you can explain the change, it’s OK.” Or: “If the change is ‘not too big’, it’s OK.” | If we start getting a new mean or SD, then we calculate total error and SEc to make sure the method is within allowable error. | |
| 14 | If the changed analytical process still produces results within allowable limits of the correct/true value, adjust the QC process and carry on. | Sort of. We change the values on the chart whenever we start getting a new mean or SD. Then the chart looks better. Or: No. Whenever there is a change we call for technical support. “All change is bad. It must be eliminated.” | If the method is within TEa, we change the mean or SD on the QC chart and, if advisable, adjust the QC rules and process. We realize change can be for the better; change is not always bad. | |
| 15 | If the method no longer meets performance standards, then stop reporting results while you make sure the numbers are correct and take corrective action if indicated. | No. We never stop reporting results. The doctors need the results. | Yes. We never release results that may be wrong and could therefore lead the clinician to the wrong decision and subsequent action, harming the patient. | |

TABLE 6: The 15 steps involved in performance-driven quality control

If you scored a perfect 5 on each question, your total score would be 75. Did you find a gap?
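The arithmetic behind the 75-point maximum (15 steps × 5.0) can be sketched as below. The article does not say how the spreadsheet treats "X" and "E" answers. This sketch assumes that "X" steps are dropped from both the score and the maximum, and that "E" counts as 5.0. The function name and the sample answers are hypothetical.

```python
def score_quiz(ratings):
    """Total a completed 15-step quiz.

    Each rating is a number from 0.0 to 5.0, "X" (does not apply / do not
    know) or "E" (equally effective but different practice).
    Assumptions (not stated in the article): "X" steps are left out of both
    the score and the maximum, and "E" counts as 5.0.
    """
    if len(ratings) != 15:
        raise ValueError("expected 15 ratings, one per step")
    total = 0.0
    maximum = 0.0
    for r in ratings:
        if isinstance(r, str):
            r = r.strip().upper()
        if r == "X":
            continue
        value = 5.0 if r == "E" else float(r)
        if not 0.0 <= value <= 5.0:
            raise ValueError(f"rating out of range: {r!r}")
        total += value
        maximum += 5.0
    return total, maximum, maximum - total   # score, best possible, gap


ratings = [5, 4, 5, 3, "X", 2, 2, 1, 0, 1, 5, 4, 2, 3, "E"]   # hypothetical answers
score, best, gap = score_quiz(ratings)
print(f"score {score:g} of {best:g}; gap {gap:g} points")   # score 42 of 70; gap 28 points
```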

Please enter your rating in the attached Excel file and e-mail the completed file with the quiz and your comments so we can assess the overall existence and magnitude of the gap. Your privacy is 100% assured.

Conclusion

We hope that you will take the time to submit this survey, and so help prove or disprove the existence of this gap and take the first steps toward closing it.

We have talked with many people who agree that this gap between QC theory and practice exists. The four prior essays ended with the words “Once again, these are my observations, and I truly hope that many of you will stand up and prove me wrong.” Not one person has stood up.

We realize that we may be opening a Pandora’s box. But if this gap does indeed exist, then it poses a danger to patient care, and it should be closed.

References

1. Westgard JO. Method validation – real world applications. http://www.westgard.com/qcapp14.htm

2. Fraser CG. Biological variation and quality for POCT. www.acutecaretesting.org, Quality assurance, June 2001.

3. Tonks DA. A study of the accuracy and imprecision of clinical chemistry determinations in 170 Canadian laboratories. Clin Chem 1963;9:217-33.

4. Cotlove E, Harris EK, Williams GZ. Biological and analytical components of variation in long-term studies of serum constituents in normal subjects: III. Physiological and medical implications. Clin Chem 1970;16:1028-32.

5. Westgard JO, Carey RN, Wold S. Criteria for judging precision and accuracy in method development and evaluation. Clin Chem 1974;20:825-33.

6. CLIA regulations §493.1245 Standard: Control procedures.

7. Brooks Z. Quality control – from data to decisions. Basic concepts, troubleshooting, designing QC systems. Educational courses. Zoe Brooks Quality Consulting, 2003 (Chapter 2, Course 1, “What should we look for in QC material”, contributed by David Plaut).

8. Wandrup JH. Quality control of multiprofile blood gas testing. www.acutecaretesting.org, June 2001.

9. Carey RN. Tips on managing the quality of immunoassays. http://www.westgard.com/guest4.htm#bio

10. Westgard JO. Six sigma quality management and requisite laboratory QC. http://www.westgard.com/essay36.htm

11. Westgard JO. QC – the multirule interpretation. http://www.westgard.com/lesson18.htm

12. Fraser CG, Hyltoft-Petersen P. Analytical performance characteristics should be judged against objective quality specifications. Clin Chem 1999;45:321-23.

13. Ricos C, Alvarez V, Cava F, Garcia-Lario JV, Hernandez A, Jimenez CV, Minchinela J, Perich C, Simon M. Analytical Quality Commission of the Spanish Society of Clinical Chemistry and Molecular Pathology (SEQC). Biological variation database. http://www.westgard.com/guest17.htm

Other references:

- Brooks Z, Wambolt C. Use some horse sense with QC. MLO, March 2007. http://www.mlo-online.com/articles/0307/0307clinical_issues.pdf

- Online quizzes and discussions at www.zoebrooksquality.com/harmonize

- Westgard JO. Quality planning and control strategies. www.acutecaretesting.org, 2001.

- Westgard JO. A six sigma primer. www.acutecaretesting.org, 2002.

- Westgard JO, Quam EF, Barry PL. Selection grids for planning QC procedures. Clin Lab Sci 1990;3:271-78.

- Fraser CG, Kallner A, Kenny D, Hyltoft-Petersen P. Introduction: strategies to set global quality specifications in laboratory medicine. Scand J Clin Lab Invest 1999;59:477-78.

- Brooks Z. Performance-Driven Quality Control. AACC Press, Washington DC, 2001. ISBN 1-899883-54-9


May contain information that is not supported by performance and intended use claims of Radiometer's products.
